Canary deployment strategy

As mentioned before, a canary deployment is a strategy in which the operator releases a new version of the application to a small percentage of production traffic. That small group exercises the new version and provides feedback. If the new version works well, the operator increases the percentage until all traffic uses the new version.

Generate film-storage APP GitOps Deployment Model

First, review a set of objects in the forked Git repository so that the deployment is configured correctly for a GitOps model.

Please follow the next section to understand the required objects and configurations.

Review and Modify Rollout strategy

The Rollout object controls the application deployment. An Argo Rollouts Rollout object closely resembles a Kubernetes Deployment; the main difference between the two is the rollout strategy field.

Please edit the file named ./canary/film-storage-rollout.yaml to define <TIME> as 30s and understand the canary deployment strategy:

    strategy:
      canary:
        analysis:
          startingStep: 1
          templates:
            - templateName: film-storage-analysis-template
        steps:
          - setWeight: 10
          - pause:
              duration: <TIME>
          - setWeight: 50
          - pause:
              duration: <TIME>

Modify Analysis Template

Please edit the file named ./canary/film-storage-analysis-template.yaml to add the required <USERNAME>, which references the user namespace in the health check URL.

    metrics:
      - name: film-storage-webmetric
        provider:
          web:
            jsonPath: '{$.status}'
            url: >-
              http://film-storage.%USER%-canary.svc.cluster.local/api/v1/health
        successCondition: result == 'UP'

For more information about AnalysisTemplate, see the Argo Rollouts documentation.
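The check configured above can be pictured as: fetch the health endpoint, extract the `status` field from the JSON body, and compare it with `UP`. The following is a minimal Python sketch of that evaluation only, not of how Argo Rollouts implements it. The function name `health_is_up` and the sample response bodies are assumptions made for illustration; the real response of the film-storage health endpoint is not shown in this guide.

```python
import json


def health_is_up(body: str) -> bool:
    """Return True when the JSON body has a top-level "status" equal to "UP".

    Mirrors jsonPath '{$.status}' combined with successCondition result == 'UP'.
    """
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        # A body that is not valid JSON cannot satisfy the condition.
        return False
    return isinstance(payload, dict) and payload.get("status") == "UP"


# Assumed response shapes, used only to exercise the function.
sample_ok = '{"status": "UP"}'
sample_down = '{"status": "DOWN"}'

print(health_is_up(sample_ok))    # True
print(health_is_up(sample_down))  # False
```

A measurement that fails this kind of condition is what would cause the analysis, and with it the rollout, to stop progressing.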

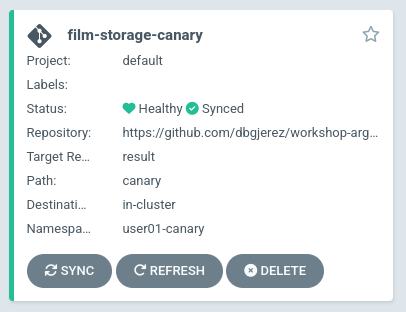

Deploy film-storage ArgoCD Application

We are going to create the film-storage-canary application, which we will use to test the canary deployment. Because we will make changes in the application's GitHub repository, use the repository that you have just forked. Create a file called film-storage-canary.yaml inside the argocd folder with the following content, setting repoURL to your own GitHub repository:

    apiVersion: argoproj.io/v1alpha1
    kind: Application
    metadata:
      name: film-storage-canary
      namespace: %USER%-gitops-argocd
    spec:
      destination:
        name: ''
        namespace: %USER%-canary
        server: 'https://kubernetes.default.svc'
      source:
        path: canary
        repoURL: https://github.com/change_me/workshop-argo-rollouts-resources
        targetRevision: HEAD
      project: default
      syncPolicy:
        automated:
          prune: false
          selfHeal: true

Apply the file:

    oc apply -f argocd/film-storage-canary.yaml

Looking at the Argo CD dashboard, you will notice a new film-storage application.

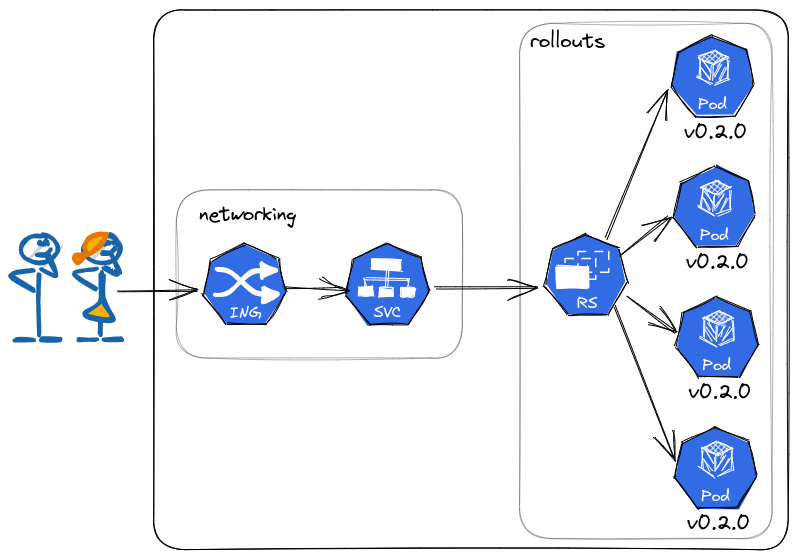

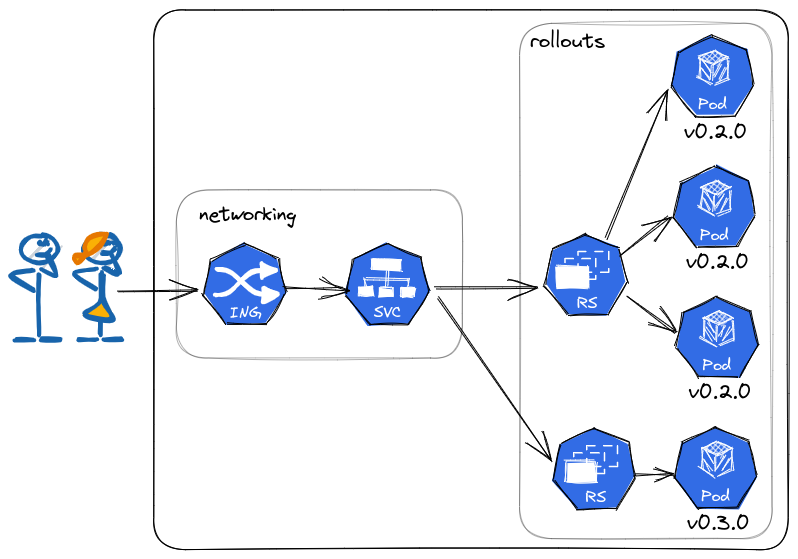

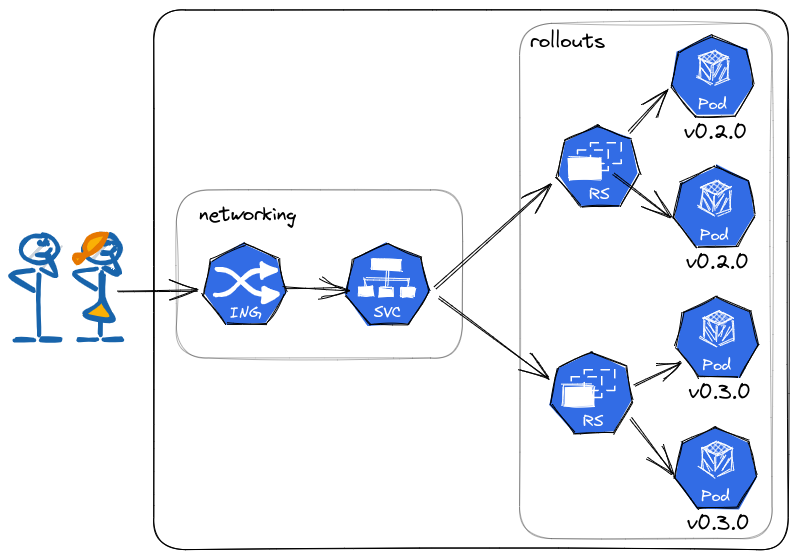

Application architecture

To achieve canary deployment with Cloud Native applications using Argo Rollouts, we have designed this architecture.

OpenShift Components - Online

- Routes and Services
- Services mapped to the rollout.

In a Blue/Green deployment there is always an offline service for testing the version that is not in production. A canary deployment does not need one, because the new version reaches production progressively.

We can also see the rollout’s status.

    oc argo rollouts get rollout film-storage --watch -n %USER%-canary

    NAME                                      KIND        STATUS     AGE  INFO
    ⟳ film-storage                            Rollout     ✔ Healthy  13m
    └──# revision:1
       └──⧉ film-storage-644f5f6df7           ReplicaSet  ✔ Healthy  13m  stable
          ├──□ film-storage-644f5f6df7-8v25c  Pod         ✔ Running  13m  ready:1/1
          ├──□ film-storage-644f5f6df7-c6ldl  Pod         ✔ Running  13m  ready:1/1
          ├──□ film-storage-644f5f6df7-gxxqp  Pod         ✔ Running  13m  ready:1/1
          └──□ film-storage-644f5f6df7-l5cvj  Pod         ✔ Running  13m  ready:1/1

When a new version is deployed, Argo Rollouts creates a new revision (ReplicaSet). The number of replicas in the new release increases according to the steps, and the number of replicas in the old release decreases accordingly. We have configured a pause duration between steps. To learn more about Argo Rollouts, see the official documentation.

Test film-storage application

We have deployed film-storage with ArgoCD. We can now test that it is up and running.

To test the online service, request the following URL:

    curl -s "$(oc get routes film-storage -n %USER%-canary --template='http://{{.spec.host}}')/api/v1/info"


film-storage canary deployment

We have split a Cloud Native Canary deployment into three automatic steps:

- Deploy canary version for 10%
- Scale the canary version to 50%
- Scale the canary version to 100%

This is just an example. The key point is that we can easily shape the canary deployment to fit our needs. To make this demo faster, none of the steps uses a pause without a duration, so Argo Rollouts will go through each step automatically.
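The three steps listed above follow from the steps in the Rollout strategy: two explicit setWeight values, plus the full promotion once the last step finishes. The Python sketch below walks a step list and derives that weight sequence and the total pause time. It is an illustration only: `parse_seconds` and `summarize` are hypothetical helpers, the step list is a hand-written copy of the strategy with `<TIME>` set to 30s, and the parser assumes every pause has a duration with a single `s`, `m` or `h` suffix.

```python
def parse_seconds(duration: str) -> int:
    """Convert a duration such as "30s", "5m" or "1h" into seconds."""
    units = {"s": 1, "m": 60, "h": 3600}
    return int(duration[:-1]) * units[duration[-1]]


def summarize(steps):
    """Return (weights reached in order, total pause time in seconds)."""
    weights, paused = [], 0
    for step in steps:
        if "setWeight" in step:
            weights.append(step["setWeight"])
        elif "pause" in step:
            # Assumes a duration is present, as in this demo.
            paused += parse_seconds(step["pause"]["duration"])
    if not weights or weights[-1] != 100:
        # After the last step the new version is promoted to all replicas.
        weights.append(100)
    return weights, paused


# Hand-written copy of the strategy steps, with <TIME> set to 30s.
STEPS = [
    {"setWeight": 10},
    {"pause": {"duration": "30s"}},
    {"setWeight": 50},
    {"pause": {"duration": "30s"}},
]

weights, paused = summarize(STEPS)
print(weights, paused)  # [10, 50, 100] 60
```

Changing the weights or durations in the step list is enough to model a slower or more gradual rollout.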

To test the canary deployment, we are going to make changes to the application.

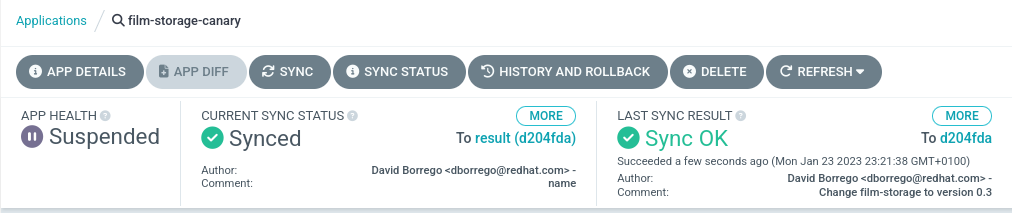

Step 1 - Deploy the canary version for 10%

We will deploy a new version V2.0.0. To do so, edit the file ./canary/film-storage-rollout.yaml and change the value 0.2 to 0.3:

    image: quay.io/dborrego/film-storage:0.3 <---

Before applying these changes, it is important to monitor the current version. To track the whole canary process, run the following command, which makes a request every 500 ms:

    watch -n0.5 curl -s "$(oc get routes film-storage -n %USER%-canary --template='http://{{.spec.host}}')/api/v1/info"

Argo Rollouts will automatically deploy a new film-storage revision. The canary version will be 10% of the replicas. This demo does not use traffic management, so Argo Rollouts makes a best-effort attempt to achieve the percentage listed in the last setWeight step between the new and old versions. In practice it creates only one replica in the new revision, because the replica count is rounded up. All requests are load balanced between the old and the new replicas.
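The rounding described above can be worked through with a few lines of arithmetic. The sketch below assumes four desired replicas, matching the four pods in the earlier status output, and rounds the canary count up as the text states. Rounding the stable count up in the same way is an assumption of this sketch, and the function names are hypothetical.

```python
def canary_replicas(total: int, weight: int) -> int:
    """Canary pods for a given weight in percent, rounded up."""
    return (total * weight + 99) // 100  # integer ceiling of total * weight / 100


def stable_replicas(total: int, weight: int) -> int:
    """Stable pods kept for the remaining percentage, rounded up (assumption)."""
    return (total * (100 - weight) + 99) // 100


TOTAL = 4  # assumed replica count, taken from the earlier status output

for weight in (10, 50, 100):
    print(weight, canary_replicas(TOTAL, weight), stable_replicas(TOTAL, weight))
# 10 1 4
# 50 2 2
# 100 4 0
```

With so few replicas the achievable percentages are coarse, which is why the 10% step still results in one full canary pod.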

Push the changes to start the deployment.

    git add .
    git commit -m "Change film-storage to version 0.3"
    git push

ArgoCD will refresh the status after a few minutes. If you do not want to wait, you can refresh it manually from the ArgoCD UI.

This is our current status:

    oc argo rollouts get rollout film-storage --watch -n %USER%-canary

    NAME                                      KIND         STATUS        AGE    INFO
    ⟳ film-storage                            Rollout      ॥ Paused      39m
    ├──# revision:4
    │  ├──⧉ film-storage-7c455c98c8           ReplicaSet   ✔ Healthy     8m7s   canary
    │  │  └──□ film-storage-7c455c98c8-mz9ks  Pod          ✔ Running     18s    ready:1/1
    │  └──α film-storage-7c455c98c8-4         AnalysisRun  ✔ Successful  13s    ✔ 1
    ├──# revision:3
    │  ├──⧉ film-storage-644f5f6df7           ReplicaSet   ✔ Healthy     39m    stable
    │  │  ├──□ film-storage-644f5f6df7-c7j2r  Pod          ✔ Running     5m39s  ready:1/1
    │  │  ├──□ film-storage-644f5f6df7-mxrsr  Pod          ✔ Running     5m4s   ready:1/1
    │  │  ├──□ film-storage-644f5f6df7-b2c9r  Pod          ✔ Running     4m31s  ready:1/1
    │  │  └──□ film-storage-644f5f6df7-z2rxd  Pod          ✔ Running     4m31s  ready:1/1
    │  └──α film-storage-644f5f6df7-3         AnalysisRun  ✔ Successful  5m34s  ✔ 1

If we make a lot of requests, about 20% of them will reach the new version, because one of the five running pods shown above belongs to the canary.
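Without traffic management, the share of requests that reach the new version is roughly the canary's share of the running pods. The sketch below computes that share from the pod counts in the status listing above (one canary pod and four stable pods). It assumes requests are spread evenly across all ready pods, and `canary_share` is a hypothetical helper.

```python
from fractions import Fraction


def canary_share(canary_pods: int, stable_pods: int) -> Fraction:
    """Fraction of evenly balanced requests that land on canary pods."""
    total = canary_pods + stable_pods
    if total == 0:
        raise ValueError("no running pods")
    return Fraction(canary_pods, total)


# Pod counts read from the status listing: 1 canary pod, 4 stable pods.
share = canary_share(1, 4)
print(f"{float(share):.0%}")  # 20%
```

Observed percentages in the watch terminal will fluctuate around this value, since load balancing is only approximately even over a short sample of requests.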

Step 2 - Scale the canary version to 50%

After 30 seconds, Argo Rollouts automatically increases the number of replicas in the new release to 2. Instead of continuing automatically after 30 seconds, Argo Rollouts can be configured to wait indefinitely until the pause condition is removed, but that is not part of this demo.

This is our current status:

oc argo rollouts get rollout film-storage --watch -n %USER%-canaryNAME KIND STATUS AGE INFO

⟳ film-storage Rollout ॥ Paused 40m

├──# revision:4

│ ├──⧉ film-storage-7c455c98c8 ReplicaSet ✔ Healthy 8m40s canary

│ │ ├──□ film-storage-7c455c98c8-mz9ks Pod ✔ Running 51s ready:1/1

│ │ └──□ film-storage-7c455c98c8-sdbd6 Pod ✔ Running 16s ready:1/1

│ └──α film-storage-7c455c98c8-4 AnalysisRun ✔ Successful 46s ✔ 1

├──# revision:3

│ ├──⧉ film-storage-644f5f6df7 ReplicaSet ✔ Healthy 40m stable

│ │ ├──□ film-storage-644f5f6df7-mxrsr Pod ✔ Running 5m37s ready:1/1

│ │ └──□ film-storage-644f5f6df7-z2rxd Pod ✔ Running 5m4s ready:1/1

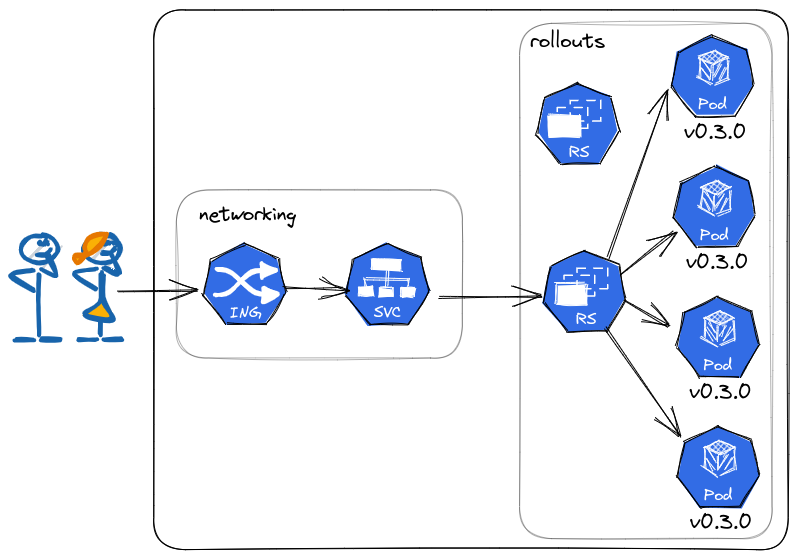

│ └──α film-storage-644f5f6df7-3 AnalysisRun ✔ Successful 6m7s ✔ 1Step 3 - Scale the canary version to 100%

After another 30 seconds, Argo Rollouts will increase the number of replicas in the new release to 4 and scale down the old revision.

This is our current status:

    oc argo rollouts get rollout film-storage --watch -n %USER%-canary

    NAME                                      KIND         STATUS        AGE    INFO
    ⟳ film-storage                            Rollout      ✔ Healthy     40m
    ├──# revision:4
    │  ├──⧉ film-storage-7c455c98c8           ReplicaSet   ✔ Healthy     9m8s   stable
    │  │  ├──□ film-storage-7c455c98c8-mz9ks  Pod          ✔ Running     79s    ready:1/1
    │  │  ├──□ film-storage-7c455c98c8-sdbd6  Pod          ✔ Running     44s    ready:1/1
    │  │  ├──□ film-storage-7c455c98c8-9m2dp  Pod          ✔ Running     9s     ready:1/1
    │  │  └──□ film-storage-7c455c98c8-p4pzq  Pod          ✔ Running     9s     ready:1/1
    │  └──α film-storage-7c455c98c8-4         AnalysisRun  ✔ Successful  74s    ✔ 1
    ├──# revision:3
    │  ├──⧉ film-storage-644f5f6df7           ReplicaSet   • ScaledDown  40m
    │  └──α film-storage-644f5f6df7-3         AnalysisRun  ✔ Successful  6m35s  ✔ 1

If we review the terminal running the watch command, we will see that all responses now come from the new version.